The NewViews database utilities consist of a number of operations that check data integrity, repair damage, improve performance by reorganizing data, and perform other miscellaneous database maintenance chores.

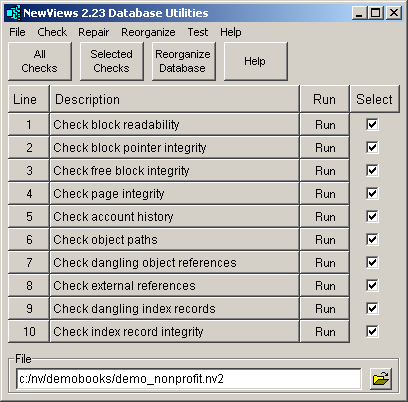

New operations are added to the database utilities as the need arises and so database utilities is an evolving repository for such operations. The main database utilities window appears as shown below:

Each operation can be run from the database utilities menu. Several are available in buttons and all check operations are also provided in a table so that you can select them and run them together.

In addition, all database utility operations can also be run from a DOS command line or batch file in order to take advantage of Windows ability to schedule tasks.

Each operation has a unique name that identifies it in progress windows, log files, and DOS command prompts. Examples are check_block_readability and reorganize_account_history. The names use underscores to avoid whitespace.

The operations fall into three broad categories: checking, repairing, and reorganizing. In this section the operations are provided in a table with a very brief description. In the next section more information is provided.

Be warned that this information is generally not accounting-related and it can be rather technical in nature. It is designed more for system administrators and customer support personnel than for day-to-day NewViews users.

Any of the commands below can be run at any time without fear of affecting the database in any permanent manner. The only exception may be the reorganize_audit_records_hibernate_all operation because it takes the audit trail off-line and although the information is not discarded, the ability to unhibernate it will be released in subsequent versions. Also, each operation can be aborted while in progress unless otherwise indicated.

| Quick Summary of Operations | ||||

| Operation | Description | Reorganize | Comment | Duration |

| check_block_readability | Checks checksums on all database blocks. This is the single-most important integrity check. | | run often | fast |

| check_block_pointer_integrity | Scans database file at the block level, checking low-level block pointers. | | not run very often | fast |

| check_free_block_integrity | Scans the list of free blocks. | | not run very often | fast |

| check_page_integrity | Performs a scan of the file at the file page level, which is a slightly higher "level" than the block level. | | not run very often | fast |

| check_account_history | Checks all account history numbers. Moderately quick. | | run often | reasonably fast |

| check_object_paths | Ensures that the path held in object matches the path that would be generated on demand. There were cases of incorrect paths following the restructuring of object, i.e. when re-parenting objects. If errors are reported run the repair_object_paths operation (i.e. issue the Repair>Object Paths), as a reorganize will not repair the problem. | Not included | not run very often | fast |

| check_dangling_object_references | Ensures objects exist if other objects reference them. There is no automatic repair facility for this type of error and a reorganize is unlikely to fix the problem. | Not included | not run very often | fast |

| check_external_pointers | This check ensures that pointers in application databases point to objects in the same database. If errors are reported run the repair_external_pointers operation (i.e. issue the Repair>External Pointers), as a reorganize will not repair the problem. | Not included | not run very often | fast |

| check_dangling_index_records | Ensures that objects exist if referenced from index records. | | not run very often | relatively slow |

| check_index_record_integrity | Ensures all records associated with objects are as expected. Only a reorganize_database can fix detected problems. Very long duration. | | run often | very slow |

| repair_free_blocks | Rebuilds the free list from scratch to ensure it's integrity. | | run when check free blocks report problems | fast |

| repair_dangling_line_numbers | Deletes line number records that reference non-existing objects. | | run once in a while | reasonably fast |

| repair_dangling_indirects | Deletes indirect records (blobs) that reference non-existing objects. | | run once in a while | reasonably fast |

| repair_dangling_double_indirects | Deletes double-indirect records (super-blobs) that reference non-existing objects. | | run once in a while | reasonably fast |

| repair_dangling_index_records | Deletes index records that reference non-existing objects. | | run once in a while | very slow |

| repair_object_paths | Forces index records to correspond perfectly with paths kept in objects. | | Run only if corresponding check_object_paths operation reports errors. Not corrected by a reorganize. | fast |

| repair_external_pointers | Only use on an application database. Finds any pointer field that points to an object in a different database. Any such pointers have their value set to null and that fixes the problem. | | run once in a while | fast |

| repair_transaction_amounts | Checks transaction amounts and automatically repairs any problems detected. This is similar to the index record integrity check except this command is restricted to transactions and also fixes the problems encountered. | | | |

| repair_interactive_indexes | So-called interactive indexes are the underlying indexes that allow you to rearrange many documents by moving line items (objects) around. For example, accounts can be moved around on reports, or invoice items can be moved around on invoices. This command performs a number of low-level checks on interactive index integrity and repairs any problems encountered. | | | |

| repair_floating_addresses | Only use if directed by customer support. | | | |

| repair_sessions | | | | |

| repair_dangling_window_objects | Only used to repair workstation and server database files. Not used for application databases. | | | |

| reorganize_database | Completely rebuilds all indexes. Very long-running operation. | | run when any check reports problems | very slow |

| reorganize_free_blocks | Defragments the free blocks and reduces database file size if possible. | | run after large deletion or after hibernating audit trail | reasonably fast |

| reorganize_audit_records_hibernate_all | Removes all audit record indexes to save space. Audit records are not deleted and can be unhibernated (ability to unhibernate expected in near future). | | run periodically to reduce database file size | fast |

| reorganize_account_history | Rebuilds all account histories from transactions. Takes a while but much less than check_index_record_integrity or reorganize_database. | | Run when check account history reports problems. | fairly slow |

| reorganize_transactions | Rebuilds transactions. The transactions are selected from a date range specified in a dialog window. Takes a while but much less than reorganize_database. | | Run when a bug is reported due to problems with a specific transaction. For example, you get a bug when you attempt to edit or delete a specific transaction. Reorganize the transacitons on a date range containing the problem transaction. | reasonably fast |

It is a good idea to run all of the check operations from time to time. As a diagnostic tool, you may be asked by Q.W.Page customer support to run check operations, especially if problems arise.

However, the most important checks to run are check_block_readability and check_index_record_integrity. If either of these fails you can call customer support for additional instructions. However, as a first line of defense, customer support will most likely recommend that you run the reorganize_database operation.

Checks checksums on all database blocks.

This check loads every block in the database and tests its internal checksum.

This is the single-most important check you can run on a database. Checksum failures are almost always caused by some form of computer hardware failure. This failure is often in the computer memory itself, or in buffers used to transport data to/from the disk or network. Hardware failures in turn tend to occur in a systemic fashion and although apparently random in nature, will tend to recur. Recurring checksum failures indicate that computer hardware replacement is warranted in order to guarantee data integrity.

Checksum failures result in the worst form of corrupted data because corruption is apparently random and can hit any data in any way. However, corruption can often be corrected by the reorganize_database operation because that operation accesses the least amount of data necessary to rebuild the database. If you are fortunate, the corruption may simply be by-passed by the reorganize operation if the checksum problems occurred in non-necessary data (i.e. in indexes for example) that are not copied but instead are rebuilt from scratch by the reorganize. So when check_block_readability fails you can issue the Reorganize>Database command to (hopefully) fix the database. If that fails, then further help from Q.W.Page customer support is necessary.

It is important that checksum problems be corrected as soon as they are detected. Your database is at risk and when you back up you may simply be backing up corrupted data which in the end will not help the situation. Note that when you use the NewViews File>Backup command checksums are automatically checked and NewViews will not back up a database file that contains checksum errors. See File Backup Command.

Scan database file at the block level, checking low-level block pointers. This check loads every block in the database and checks that internal pointers are set correctly to corresponding blocks in page block lists and the block free list. This check is not as important as block_readability and is usually performed as part of a general diagnostic or when there are other indications of integrity problems in the database.

Performs a scan of free blocks. If this operation reports errors, you can issue the Repair>Free Blocks command to fix them.

Performs a scan of the database at the database page level, which is a slightly higher level than the block level. This check loads every internal database page in the database file, performs basic integrity checks on it, and checks that it is connected as expected to all related pages.

See reorganize_account_history for a description of account histories. Checking account histories is relatively important but fast. On failure you can just run a reorganize_account_history operation.

This check loads each object in the database file and checks that the path store in the object matches the path generated on demand by the odb_path method. If the this operation reports errors, you can issue the Repair>Object Paths command to fix them. There is no automatic repair facility for this type of error and a reorganize is unlikely to fix the problem.

This check loads each object in the database file and checks the existence of every other object that it references. Each object references any number of other objects and the purpose of this check is to determine whether that these objects all exist. If errors are reported by this check you should send your database to customer support for diagnosis and repair. There is no automatic repair facility for this type of error and a reorganize is unlikely to fix the problem.

This check loads each index record in the database file and checks the existence of the object referenced by the index record. Each index record references an object and the purpose of this check is to determine whether that object exists. Usually dangling index records are a remnant of earlier bugs that (hopefully) have already been eliminated. If dangling index records have been detected you can run repair_dangling_index_records to fix them. Then run this check again to verify that the dangling records were actually repaired.

This check loads every object in the database and checks all index records associated with the object. This check typically takes longer than any other check operation. If any exceptions are detected you must run the reorganize_database operation to fix it. This check is one of the two most important checks you can perform (the other being check_block_readability).

Each database has an internal list of free blocks. Scenarios have occurred where the free block list has been corrupted. This operation scans the entire database file for free blocks and rebuilds the free block list from scratch, ensuring that the resulting free block list is not corrupt.

These check operations search for records that reference objects that no longer exist. Such records are called dangling records, and they should have been deleted. However, scenarios have occurred where such records were in fact not deleted. Although the cause of such corruption may have been eliminated (i.e. the original bug was corrected), the corruption caused by the bug remains until it is cleaned up, either by running this operation or by performing a reorganize_database. Each operation is different only in that it searches a different set of records in the database.

Note that there are many more index records than the other types, so this operation will take considerably more time.

This operation processes all objects and related data, rebuilds all indexes, and rebuilds account histories.

A database reorganize can fix a damaged database because it rebuilds the database from scratch, only accessing the minimum amount of data necessary in the original database. Checksum errors or other errors most often occur in non-essential areas that are not accessed by the database reorganize, and which are therefore not transferred to the reorganized database. As a side-effect, a database reorganize also reduces "internal fragmentation", and as a result there may be a slight reduction of database size and improvements in database access speed.

Ensures that path held in object matches the path that would be generated on demand from the indexes. There were cases of incorrect paths following the restructuring of objects, i.e. when re-parenting objects.

If errors are reported by check_object_paths, run the repair_object_paths operation (i.e. issue the Repair>Object Paths). A reorganize will not repair this problem.

Defragments the free blocks. What that means is that all free blocks are reorganized into a single contiguous area at the end of the database file. Then the file is reduced in size by the size of that free area. That is, the database file is chopped to a shorter length.

Note that this differs from repair_free_blocks which does not move any free blocks or reduce the size of the database file.

This operation takes the entire audit trail off-line. The audit trail actually remains in the database, but it is hibernated in a highly compressed form. Hibernating the audit trail frees up significant space within the database file, i.e. increasing the number of free blocks within the file, but it does not decrease the size of the database file directly. Run the reorganize_free_block operation to reduce the size of the database file. You can also run the reorganize_database operation to reduce the file size slightly further, although this operation takes a long time.

At present there is no way to "unhibernate" the audit trail but this ability should be released in upcoming versions.

From version 2.15 onward, reports containing accounts now use a mechanism called account histories rather than using account postings directly. This enabled NewViews to continue providing full daily resolution on reports while significantly reducing the number of postings indexes that have to be maintained. At the same time that account histories were introduced, the ability to enable/disable account postings indexes for any or all total accounts was also introduced and as a result database file sizes were significantly reduced. (The summary postings functionality was also removed in version 2.15 as it was no longer necessary.)

Note that when you run the reorganize_database operation, part of that operation is to reorganize, i.e. clear and rebuild, the account histories. However, the reorganize_account_history operation also enables you to reorganize the account histories by themselves; that is without rebuilding all objects and indexes as performed by a general database_reorganize operation.

If the check_account_histories operation reports exceptions, you should run reorganize_account_history and then try a reorganize_account_history again. If that fails then a full reorganize_database operation is necessary.

NewViews manages a text-only log file to track important (non-accounting) operations performed on databases. This includes all operations performed by the database utilities. Each such operation appends a log record to the log file. The log record identifies the operation, the database it was performed on, the date/time, the duration of the operation, the program version, and so on.

The name of the log file is nv2_log_2009.txt. It is kept in the NewViews installation folder so the full path to the log file will be something like: d:/nv/nv2_log_2009.txt.

Note that the current year is part of the log file name so that a new log file will be started with each calendar year. This tends to keep individual log files to a more manageable size. The log file is text-only so you can open it with any text editor or word processing program. The records resemble the example section of a log file shown below:

.log_record_1257949085_0 {

.command {

.name check_block_readability

.result succeeded

.runtime_hms 0:00:02

}

.computer {

.hostname benn

}

.database {

.file d:/nv/tutorial_216/database.nv2

.id 20070928162256

.type application

.size 2113536

}

.date {

.hmsdmy {09:18:05 11-Nov-2009}

.ymdhms 20091111091805

.epoch 1257949085

}

.exception {

.count 0

}

.program {

.nameofexecutable d:/nv/nv216.exe

.version 2.16.0.20091209

}

}

.log_record_1257949091_1 {

.command {

.name check_block_pointer_integrity

.result succeeded

.runtime_hms 0:00:02

}

.computer {

.hostname benn

}

.database {

.file d:/nv/tutorial_216/database.nv2

.id 20070928162256

.type application

.size 2113536

}

.date {

.hmsdmy {09:18:11 11-Nov-2009}

.ymdhms 20091111091811

.epoch 1257949091

}

.exception {

.count 0

}

.program {

.nameofexecutable d:/nv/nv216.exe

.version 2.16.0.20091209

}

}

.log_record_1257952675_7 {

.command {

.name check_index_record_integrity

.result completed

.runtime_hms 0:00:10

}

.computer {

.hostname benn

}

.database {

.file d:/nv/tutorial_216/database.nv2

.id 20070928162256

.type application

.size 2113536

}

.date {

.hmsdmy {10:17:55 11-Nov-2009}

.ymdhms 20091111101755

.epoch 1257952675

}

.exception {

.count 3

.file d:/nv/tutorial_216/database_check_index_record_integrity_20091111101755.chm

.list {

.1 {

.text {Simulated block error of some kind.}

.exception_id 314120091103142923

}

.2 {

.text {Simulated block error of some kind.}

.exception_id 314120091103142923

}

.3 {

.text {Simulated block error of some kind.}

.exception_id 314120091103142923

}

}

}

.program {

.nameofexecutable d:/nv/nv216.exe

.version 2.16.0.20091209

}

}

The fields are reasonably self-explanatory.

In each log record the operation is identified in the .name field. The result of the operation is presented in the .result field. For example an operation may have succeeded or failed due to an unexpected error. If the operation failed due to an error, then the error is provided in the .error field. Or the operation may have completed as in the third log record above. In that record the check_index_record_integrity completed (instead of succeeded) because detected problems (called exceptions) were detected. The exceptions are listed in the .list field and they are also provided in an exception file which is actually a Window help file (see Exception Files). The exception file is in turn identified by the .file field.

Note that programs other than database utilities may also append log records to the log file. For example, the File>Backup appends a log record. Other operations may add any fields deemed appropriate to the operation performed, but typical log records follow a protocol similar to that shown in the records above.

| Log File Record Fields | ||

| Field | Sample Value | Description |

| .command.name | check_index_record_integrity | Identifies the operation that was performed. |

| .command.result | succeeded | Operation result, i.e. succeeded, failed, completed, aborted, too_many_errors, etc. |

| .command.runtime_hms | 0:03:57 | Operation ran for this amount of time in hhh:mm:ss (hours:minutes:seconds) format. |

| .computer.hostname | COMPUTER05 | The operating system supplied name of the computer on which the operation was performed. |

| .database.file | d:/nv/demobooks.nv2 | The operation was performed on this database file. |

| .database.id | 20070607175816 | The internal database file identifier. |

| .database.type | application | The database type, i.e. application/workstation/server. |

| .database.size | 6307840 | Database size in bytes at the time the operation was performed. |

| .date.hmsdmy | 10:17:55 11-Nov-2009 | Date the operation was performed formatted as above. |

| .date.ymdhms | 20091111101755 | Same operation date as above but in a yyyymmddhhmmss format. |

| .date.epoch | 1257952675 | Same operation date as above but in number of seconds from beginning of epoch. |

| .exception.count | 0 | Number or errors, problems, fixes, or anomalies that were encountered. |

| .exception.file | | If exceptions occur, the exceptions are also written to this file. See The Exception File. |

| .exception.list | N/A | List of exception information records. This information may be of great assistance to customer support in identifying and correcting problems. |

| .program.nameofexecutable | d:/nv/nv2.exe | The NewViews program that performed the operation. |

| .program.version | 2.15.3.20090903 | The version of the NewViews program that performed the operation. |

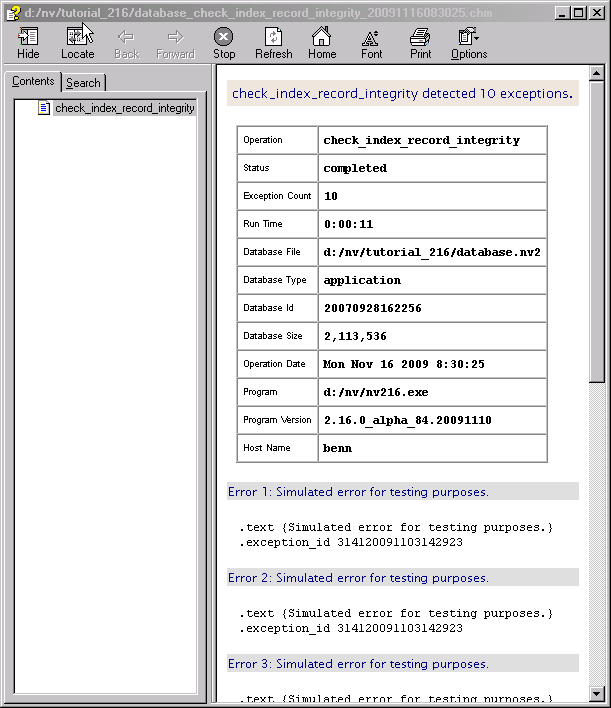

When exceptions occur, the list of exceptions is provided in a log file record, but in addition, the exceptions are also provided in a separate file called the exception file. .

An exception file is actually a Windows Help file, i.e. compiled HTML with extension .chm. It is pops up immediately after the operation is performed, but only if exceptions are actually encountered by the operation. Shown below is a Windows help window displaying the (simulated) exceptions generated by a check_index_records operation.

So exceptions are actually recorded in two places: the log file and the exception file. The exception (i.e. help) file can be used to print the exceptions in a nicely formatted form if desired. The help file has other useful features such as the ability to find text. Note, however, that a good text editor or word processor can also be used directly on the log file. Note that all exceptions for all operations are found together in the log file, but an exception file reports only the exceptions encountered by one operation performed on one database file.

Also, an exception file is created only if exceptions are actually encountered. In that case, the exception file is created in the same folder that contains the database. The name of the exception file begins with the same name as the database file, followed by a suffix that identifies the operation and date, and also which makes the file name unique. Thus, exception files are kept in the same folder as the database file, and the database file and all exception files associated with it will appear together when the files are sorted by name. And because exception file names are unique, they are never overwritten by subsequent operations.

Typically, once problems has been resolved and/or fixed, you can delete the associated exception files. On the other hand, you should keep the log file for possible inspection by Q.W.Page customer support.

Database utilities is actually a script that you can run in any of three ways:

Issue NewViews 2.0>Database Utilities from the Windows start menu.

It might help to know that the shortcut for this start menu item is:

d:\nv\nv2.exe .script d:/nv/nv2.exe/system/database_utilities.qw_script

Scripts are usually in the host directory system but this script is located in a folder inside nv2.exe (which contains a Tcl virtual file system.) Point 2 below also uses this technique (i.e. the -script option) except NewViews is run from a command prompt instead of a start menu item.

Run nv2.exe from a command prompt using the .script option.

You can run NewViews in order to run a specific script. The path to the script follows the .script option. Although such a script is usually somewhere in the host directory system, the nv2.exe actually contains a virtual file system. The database_utilities.qw_script is actually located in the virtual file system and can be run from the command prompt as follows:

d:\>nv2.exe .script d:/nv/nv2.exe/system/database_utilities.qw_script

Here we are assuming that d:\nv\ is the installation folder.

You also run database utilities from the command line using the shortcut du as follows:

d:\>nv2.exe .script du

To keep things simple we will us the du shortcut from now on.

Issue Tools>Script Evaluate and select the script.

This method allows you to run the script from within NewViews. However, that really is not recommended at this time because you cannot run the database utilities operations on a database that is already open so there seems to be little benefit. However, this is apt to change in future versions.

Each operation can be run from the database utilities menu. This includes all check, repair, and reorganize operations. In addition each check operation is provided as a table row so that it can be run by clicking the row's <Run> button. Or, the row can be selected by checking the Selected column and all selected operations can be run in one action by issuing the Check>Selected Checks menu command or the toolbar <Selected Checks> button. You can also run all checks, whether they are selected or not, by issuing the Check>All Checks menu command or the toolbar <All Checks> button.

All repair and reorganize commands are run from the menu. In addition there is a <Reorganize Database> button in the toolbar.

The previous section showed how you run the database utilities script from a DOS command line. That method brings up the database utilities window from which any operation can be run interactively. However, you can also run any or all operations from a command line without bringing up the window at all. The command line below runs the check_block_readability operation on database d:/nv/tutorial/database.nv2.

d:\nv\nv2.exe .script du .command check_block_readability .database_path d:/nv/tutorial/database.nv2

That's a lot of typing and it seems that running operations interactively from the database utilities window is a better way to go. However, running operations from the command line is useful in Windows batch files, i.e. files with the .bat extension. That way you can set up a batch file to run any number of database utilities operations.

Above, the sample command line was used to run one operation on one database. However, you can also run multiple operations on a database by specifying a list of operations in the .file field. When doing this, the list of operations must be enclosed in double-quote characters.

d:\nv\nv2.exe .script du .command "check_block_readability check_index_record_integrity" .database_path d:/nv/tutorial/database.nv2

The command line above runs two operations on the same database, but any number of operations could have been specified. When the command line is getting quite long you can break it into several lines by using the batch file line continuation character, i.e. the "^" caret character. However, a better way to perform multiple operations is to list the operations in a text file and then spficiy that text file as follows:

d:\nv\nv2.exe .script du .command c:/nv/du_operations.txt .database_path d:/nv/tutorial/database.nv2

Running multiple operations from the same command line or text file has an advantage. It only requires running NewViews (and hence database utilities) once per database, instead of running NewViews for every operation on each database. If you are running many operations on many databases this can become significant. Also note that the progress bars will remain up for multiple operations on the same database and disappear only when all checks are finished for that database. When you run one operation at a time from the command line, the progress bar for that single operation will disappear.

Whenever NewViews uses a blue progress bar you can abort the current operation using the <Abort> button in the progress window toolbar.

You can abort an operation at any time.

You are always asked for confirmation before an operation is aborted.

The database is never harmed by an abort.

When an operation is running, the <Abort> button in the progress window can be used to abort the operation. You will be asked for abort confirmation, or you can resume the operation.

Some operations are all-or-nothing. In that case a red progress bar is displayed and it has no abort button.